Our Popular Courses

For Kindergarteners

Our Experienced Teachers

Curriculum Elements of Learning

Committed to Excellence

Happy Students

Open Playgrounds

Qualified Teachers

Years In Operation

Our Educational Programs

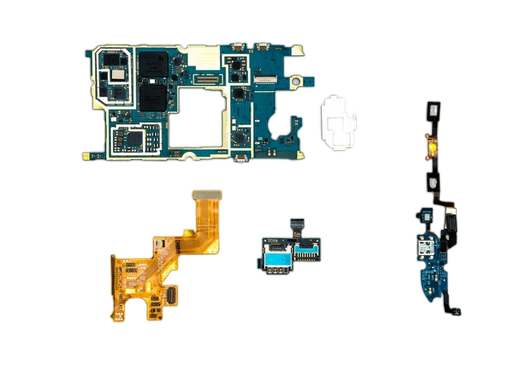

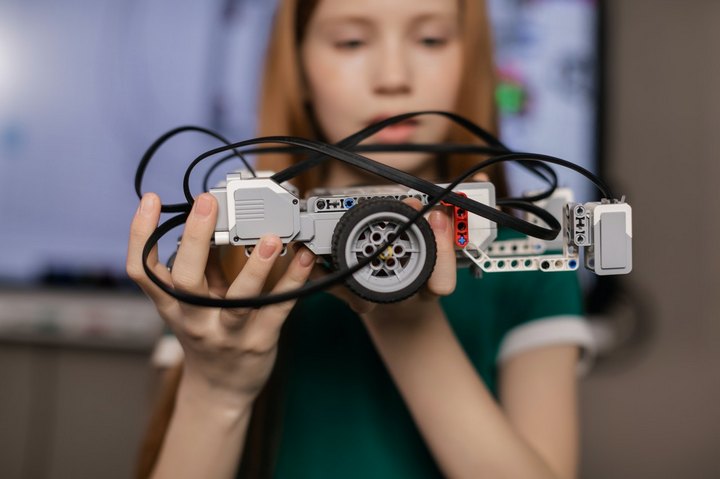

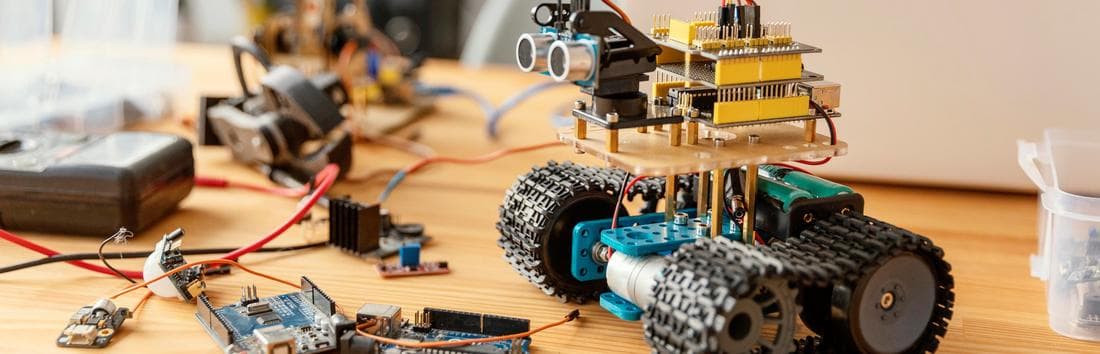

School of Robotics, Electronics and Programming

A robotics school for children, students, and adults. The project's goal is to create a laboratory for robotics, programming, and 3D technologies. Its educational program, built on the STEM approach, focuses on the practical use of acquired knowledge, equipment, and components to solve real-life problems. Graduates will have the opportunity to join design offices around the world and to create their own projects, which they may present at international contests and startup events.

Parent testimonials

We Create a Nurturing Environment

Valentina Bauch

Dietitian

Clovis Emard

Physician Assistant

Briana Schamberger

Public Relations Specialist

Efrain Ondricka

Tax Accountant

Latest Educational Blogs

Educating For The Future

Robotics and Art: An Interdisciplinary Approach

In the fusion of robotics and art, the boundary between technology and creativity blurs, giving rise to a fascinating interdisciplinary domain where programmable machines become…

The Evolution of Robotics Competitions

The landscape of robotics competitions has evolved dramatically over the decades, transforming from niche gatherings of tech enthusiasts into global events that showcase some of…

The Evolution and Future of Robotics: Key Points and Trends

The field of robotics has come a long way since its inception. From basic mechanical devices to sophisticated machines with artificial intelligence (AI), the…

Interview with a Robotics Engineer

Robotics technology has grown explosively in scope and applications over the past few decades. Once mostly relegated to factory assembly lines, robots have now infiltrated many…

Using Instagram to Teach Programming and Coding

Social media platforms like Instagram have become a ubiquitous part of our lives. But did you know Instagram isn’t just for sharing selfies and viral…

The Arena of Innovation: Most Prestigious Robotics Competitions

In the realm of robotics, competitions are more than mere contests; they are a vibrant platform for innovation, teamwork, and problem-solving. Similar to the thrilling…